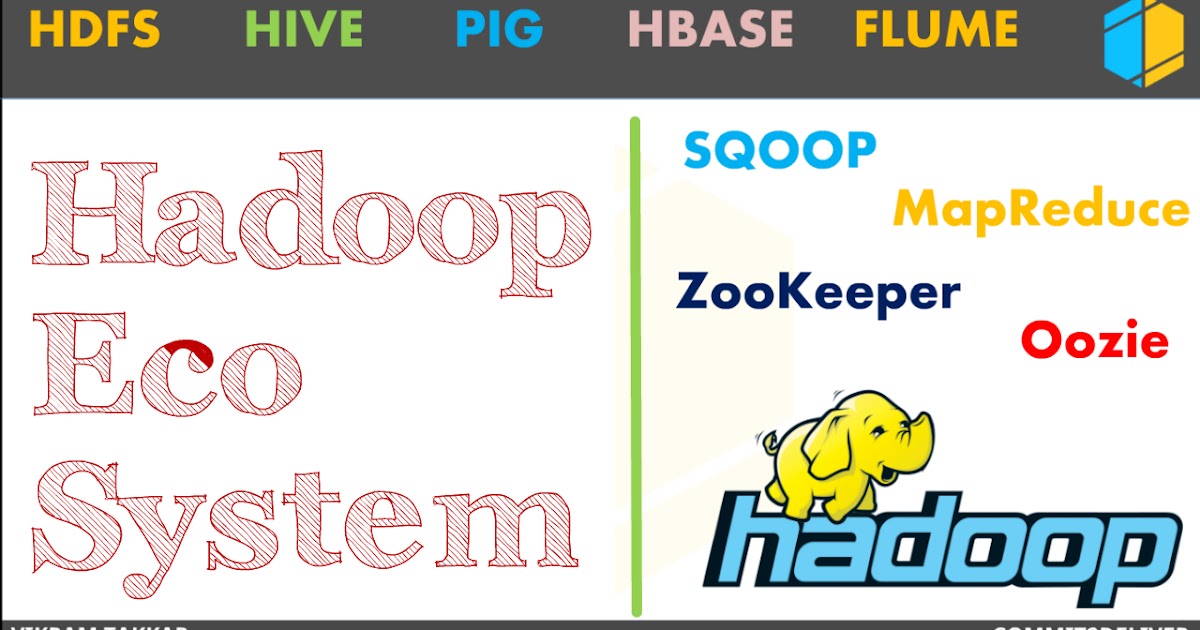

What is the difference between Hadoop and HDFS?

HBase vs Hadoop HDFS:

- Hadoop is a Big Data solution for large-scale data storage and data processing. ...

- HBase is a Not Only SQL (NoSQL) database that runs on top of a Hadoop cluster. ...

- In Hadoop HDFS we can store unstructured, semi-structured, and structured data.

What is the difference between Hadoop and Hive?

- Apache Hive helps in analyzing the huge dataset stored in the Hadoop file system (HDFS) and other compatible file systems.

- HiveQL: for querying data stored in a Hadoop cluster.

- Exploits the scalability of Hadoop by translating queries into Hadoop jobs.

- Hive is not a full database.

- It does not provide record-level updates.

- Hadoop is a batch-oriented system.

When to use Hadoop, HBase, Hive, and Pig?

- Hive vs Pig

- What is Big Data and Hadoop?

- HIVE Hadoop

- PIG Hadoop

- Apache Pig Use Cases - Companies Using Apache Pig

- Difference between Pig and Hive

- Pig vs. Hive

- Pig vs. Hive- Performance Benchmarking

What is the Hadoop distributed file system designed to handle?

Hadoop was designed to handle petabytes and exabytes of data distributed over multiple nodes in parallel. Allowing Big Data to be processed in memory and distributed across a dedicated set of nodes can solve complex problems in near real time with highly accurate insights.

What is HDFS used for?

HDFS is a distributed file system that handles large data sets running on commodity hardware. It is used to scale a single Apache Hadoop cluster to hundreds (and even thousands) of nodes. HDFS is one of the major components of Apache Hadoop, the others being MapReduce and YARN.

What is HDFS How does it handle big data?

HDFS handles large files by dividing them into blocks, replicating the blocks, and storing them on different cluster nodes. This is what makes it highly fault-tolerant and reliable. HDFS is designed to store large datasets in the range of gigabytes or terabytes, or even petabytes.

What are the features of HDFS?

Hadoop HDFS offers features such as fault tolerance, replication, reliability, high availability, distributed storage, and scalability.

What is a Hadoop distribution?

Hadoop distributions are used to provide scalable, distributed computing against on-premises and cloud-based file store data. Distributions are composed of commercially packaged and supported editions of open-source Apache Hadoop-related projects.

How does distributed file system work?

A Distributed File System (DFS) is a file system that is distributed across multiple file servers or multiple locations. It lets programs access and store isolated files just as they do local ones, allowing programmers to access files from any network or computer. It manages files and folders on different computers.

How much data can Hadoop handle?

HDFS can easily store terabytes of data using any number of inexpensive commodity servers. It does so by breaking each large file into blocks (the default block size was 64 MB in Hadoop 1.x; Hadoop 2.x and later default to 128 MB).
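The block arithmetic can be sketched in a few lines of Python. This is only an illustration, assuming binary megabytes and a fixed block size; the function names are hypothetical and not part of any Hadoop API.

```python
MIB = 1024 ** 2  # one binary megabyte, in bytes (assumption for this sketch)


def block_count(file_size: int, block_size: int = 128 * MIB) -> int:
    """Return how many blocks a file of file_size bytes would be split into."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    # Ceiling division: a partially filled last block still counts as a block.
    return -(-file_size // block_size)


def last_block_size(file_size: int, block_size: int = 128 * MIB) -> int:
    """Return the size in bytes of the final (possibly partial) block."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    if file_size == 0:
        return 0
    remainder = file_size % block_size
    return remainder or block_size
```

Under these assumptions, a 1 GiB file occupies 8 blocks at 128 MB and 16 blocks at 64 MB; one extra byte adds a ninth block that holds only that byte.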

How big data problems are handled by Hadoop?

Hadoop can handle arbitrary text and binary data, so it can digest any unstructured data easily. Separate storage and processing clusters are not the best fit for big data; Hadoop clusters, however, provide storage and distributed computing all in one.

How is data distributed in Hadoop?

Hadoop is considered a distributed system because the framework splits files into large data blocks and distributes them across nodes in a cluster. Hadoop then processes the data in parallel, with each node processing only the data it has access to.

What are the characteristics of a distributed file system?

Desirable features of a distributed file system:

- Transparency (for example, structure transparency). ...

- User mobility: automatically bring the user's environment (e.g. the user's home directory) to the node where the user logs in. ...

- Performance. ...

- Simplicity and ease of use. ...

- Scalability. ...

- High availability. ...

- High reliability. ...

- Data integrity.

What are the main goals of Hadoop?

Hadoop (hadoop.apache.org) is an open source scalable solution for distributed computing that allows organizations to spread computing power across a large number of systems. The goal with Hadoop is to be able to process large amounts of data simultaneously and return results quickly.

What is HDFS?

HDFS stands for Hadoop Distributed File System. It is a fault-tolerant distributed file system designed to run on low-cost commodity hardware. HDFS provides high-throughput access to application data, suits applications that have large data sets, and enables streaming access to file system data in Apache Hadoop.

What are some considerations with HDFS?

By default, HDFS is configured with 3x replication which means datasets will have 2 additional copies. While this improves the likelihood of localized data during processing, it does introduce an overhead in storage costs.
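The storage overhead of replication is simple multiplication. The sketch below assumes every block is replicated the same number of times and ignores other overheads; the function names are hypothetical.

```python
TB = 10 ** 12  # one decimal terabyte, in bytes (assumption for this sketch)


def raw_bytes_required(logical_bytes: int, replication: int = 3) -> int:
    """Raw disk space consumed when every byte is stored `replication` times."""
    if logical_bytes < 0 or replication < 1:
        raise ValueError("logical_bytes must be >= 0 and replication >= 1")
    return logical_bytes * replication


def usable_bytes(raw_capacity_bytes: int, replication: int = 3) -> int:
    """Logical data that fits into a cluster with the given raw capacity."""
    if raw_capacity_bytes < 0 or replication < 1:
        raise ValueError("raw_capacity_bytes must be >= 0 and replication >= 1")
    return raw_capacity_bytes // replication
```

With 3x replication, 10 TB of data needs 30 TB of raw disk, and a cluster with 300 TB of raw capacity holds about 100 TB of data.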

What is the history of HDFS?

The design of HDFS was based on the Google File System. It was originally built as infrastructure for the Apache Nutch web search engine project but has since become a member of the Hadoop Ecosystem. HDFS is used to replace costly storage solutions by allowing users to store data on commodity hardware instead of proprietary hardware/software solutions. Initially, MapReduce was the only distributed processing engine that could use HDFS; however, other technologies such as Apache Spark or Tez can now operate against it. Other Hadoop data services components like HBase and Solr also leverage HDFS to store their data.

What is HDFS in Hadoop?

HDFS (Hadoop Distributed File System) is used for storage in a Hadoop cluster. It is mainly designed to work on commodity hardware (inexpensive devices), following a distributed file system design. HDFS favors storing data in large blocks rather than in many small ones. HDFS in Hadoop provides fault tolerance and high availability to the storage layer and the other devices present in the Hadoop cluster.

What are the features of HDFS?

Some important features of HDFS (Hadoop Distributed File System):

- It’s easy to access the files stored in HDFS.

- HDFS also provides high availability and fault tolerance.

- Provides scalability to scale nodes up or down as required.

- Data is stored in a distributed manner, i.e. various DataNodes are responsible for storing the data.

- HDFS provides replication, which removes the fear of data loss.

- HDFS provides high reliability, as it can store data in the range of petabytes.

- The NameNode and DataNodes have built-in servers that make it easy to retrieve cluster information.

- Provides high throughput.

What is DFS?

DFS stands for distributed file system: the concept of storing files across multiple nodes in a distributed manner. A DFS provides the abstraction of a single large system whose storage equals the sum of the storage of the nodes in the cluster.

What is a name node in Hadoop?

NameNode: The NameNode works as the master in a Hadoop cluster and guides the DataNodes (slaves). The NameNode is mainly used for storing metadata, i.e. the data about the data. Metadata can include the transaction logs that keep track of the user’s activity in a Hadoop cluster.

How does a data node work in Hadoop?

DataNode: DataNodes work as slaves. They are mainly used for storing the data in a Hadoop cluster. The number of DataNodes can range from 1 to 500 or even more, and the more DataNodes a Hadoop cluster has, the more data it can store. It is therefore advised that each DataNode have a high storage capacity so it can hold a large number of file blocks. DataNodes perform operations such as creation and deletion according to the instructions provided by the NameNode.

What is system failure in Hadoop?

System failure: A Hadoop cluster consists of many nodes built from commodity hardware, so node failure is possible. A fundamental goal of HDFS is to detect such failures and recover from them.
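Recovery from a node failure can be pictured as re-creating the lost replicas on surviving nodes. The following is a toy sketch, not the HDFS recovery algorithm: the `placement` dictionary, the node names, and the "pick the first available node" choice are all assumptions made for illustration.

```python
def re_replicate(placement, failed_node, live_nodes, replication=3):
    """Return a new block -> nodes mapping with lost replicas re-created.

    placement:   dict mapping block id -> list of node names holding a replica
    failed_node: name of the node that went down
    live_nodes:  names of nodes that are still healthy
    """
    repaired = {}
    for block_id, nodes in placement.items():
        # Replicas that survived the failure.
        survivors = [n for n in nodes if n != failed_node]
        missing = max(replication - len(survivors), 0)
        # Healthy nodes that do not already hold this block.
        candidates = [
            n for n in live_nodes if n != failed_node and n not in survivors
        ]
        # Toy policy: take the first candidates in list order.
        repaired[block_id] = survivors + candidates[:missing]
    return repaired
```

If too few healthy nodes remain, the block simply stays under-replicated in this sketch.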

What is the simple coherency model in Hadoop?

Simple coherency model: HDFS needs a write-once-read-many access model for files. A file that has been written and then closed should not be changed; data can only be appended to it. This assumption minimizes data coherency issues. MapReduce fits perfectly with this kind of file model.
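The write-once-read-many rule can be illustrated with a small in-memory class. This is a hypothetical sketch of the access model only; `WriteOnceFile` is not an HDFS class, and real HDFS clients behave differently in detail.

```python
class WriteOnceFile:
    """Toy file that allows writes until closed, then only appends and reads."""

    def __init__(self):
        self._data = bytearray()
        self._closed = False

    def write(self, data: bytes) -> None:
        # Normal writes are only allowed while the file is still open.
        if self._closed:
            raise PermissionError("file is closed; only append is allowed")
        self._data += data

    def close(self) -> None:
        self._closed = True

    def append(self, data: bytes) -> None:
        # Appending adds to the end and never changes existing bytes.
        self._data += data

    def read(self) -> bytes:
        return bytes(self._data)
```

Because existing bytes never change after the file is closed, any number of readers see consistent content without coordination, which is the point of the model.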

What is HDFS?

HDFS is a distributed file system that handles large data sets running on commodity hardware. It is used to scale a single Apache Hadoop cluster to hundreds (and even thousands) of nodes. HDFS is one of the major components of Apache Hadoop, the others being MapReduce and YARN. HDFS should not be confused with or replaced by Apache HBase, which is a column-oriented non-relational database management system that sits on top of HDFS and can better support real-time data needs with its in-memory processing engine.

What is HDFS in streaming?

HDFS is intended more for batch processing versus interactive use, so the emphasis in the design is for high data throughput rates, which accommodate streaming access to data sets.

Why is redundancy important in Hadoop?

The redundancy also allows the Hadoop cluster to break up work into smaller chunks and run those jobs on all the servers in the cluster for better scalability. Finally, you gain the benefit of data locality, which is critical when working with large data sets.

What is HDFS?

Hadoop Distributed File System is a fault-tolerant data storage file system that runs on commodity hardware. It was designed to overcome challenges traditional databases couldn’t. Therefore, its full potential is only utilized when handling big data.

How does HDFS work?

HDFS divides files into blocks and stores each block on a DataNode. Multiple DataNodes are linked to the master node in the cluster, the NameNode. The master node distributes replicas of these data blocks across the cluster. It also instructs the user where to locate wanted information.
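The split-and-replicate flow can be sketched as follows. This is a simplified illustration that places replicas round-robin; it is not the placement policy HDFS actually uses, and the function and node names are hypothetical.

```python
def place_blocks(file_size, block_size, datanodes, replication=3):
    """Return a dict mapping block index -> list of DataNodes holding a replica.

    Plays the role of the NameNode's bookkeeping: it records where each
    block lives but stores no file data itself.
    """
    if block_size <= 0 or file_size < 0:
        raise ValueError("block_size must be > 0 and file_size must be >= 0")
    if replication > len(datanodes):
        raise ValueError("not enough DataNodes for the requested replication")
    n_blocks = -(-file_size // block_size)  # ceiling division
    placement = {}
    for block in range(n_blocks):
        # Round-robin: consecutive nodes, so replicas land on distinct nodes.
        placement[block] = [
            datanodes[(block + r) % len(datanodes)] for r in range(replication)
        ]
    return placement
```

A client that wants block 1 of the file would look it up in the returned mapping and read from any of the listed DataNodes.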

What is HDFS in Apache?

HDFS (Hadoop Distributed File System) is a vital component of the Apache Hadoop project. Hadoop is an ecosystem of software that work together to help you manage big data. The two main elements of Hadoop are:

What is the master node in HDFS?

HDFS has a master-slave architecture. The master node is the NameNode, which manages multiple slave nodes within the cluster, known as DataNodes.

Why do companies use Hadoop?

They use Hadoop to track and analyze the data collected to help plan future inventory, pricing, marketing campaigns, and other projects.

Can you change the number of replicas in Hadoop?

You can change the number of replicas; however, it is not advisable to go below three. The replicas should be distributed in accordance with Hadoop’s Rack Awareness policy, which notes that the number of replicas has to be larger than the number of racks, and that one DataNode can store only one replica of a given data block.
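The two rules quoted above can be checked mechanically for a single block. The sketch below tests only those two rules as stated here, not the full rack awareness policy; the `(rack, datanode)` pair representation and the function name are assumptions.

```python
def follows_quoted_rules(replica_locations):
    """Check one block's replicas against the two rules quoted in the text.

    replica_locations: list of (rack, datanode) pairs, one per replica.
    """
    racks = {rack for rack, _ in replica_locations}
    # Rule: one DataNode stores at most one replica of the block.
    one_replica_per_node = len(set(replica_locations)) == len(replica_locations)
    # Rule: more replicas than racks holding them.
    more_replicas_than_racks = len(replica_locations) > len(racks)
    return one_replica_per_node and more_replicas_than_racks
```

For example, three replicas spread over two racks on three different DataNodes pass the check, while two replicas on the same DataNode do not.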

Is a file system reliable?

It is reliable. The file system stores multiple copies of data in separate systems to ensure it is always accessible.

What is Hadoop distributed file system?

Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware, which is cheaper. Since Hadoop requires the processing power of multiple machines, and deploying costly hardware is expensive, commodity hardware is used. When commodity hardware is used, failures are more ...

Why is HDFS important?

HDFS provides high throughput access to the data stored. So it is extremely useful when you want to build applications which require large data sets. HDFS was originally built as infrastructure layer for Apache Nutch.

Is HDFS a slave?

HDFS is now part of the Apache Hadoop project. It has a master/slave architecture: one of the machines is designated as the master node (or NameNode), and every other machine acts as a slave (or DataNode).

HDFS Storage Daemons

- Hadoop's MapReduce follows a master-slave architecture, and HDFS has a NameNode and DataNodes that work in a similar pattern: 1. NameNode (master) 2. DataNode (slave). NameNode: The NameNode works as the master in a Hadoop cluster and guides the DataNodes (slaves). The NameNode is mainly used for storing metadata, i.e. nothing but the data a…

Objectives and Assumptions of HDFS

- 1. System failure: A Hadoop cluster consists of many nodes built from commodity hardware, so node failure is possible. A fundamental goal of HDFS is to detect such failures and recover from them. 2. Maintaining large datasets: HDFS handles files ranging in size from GB to PB, so HDFS has to be capable of dealing with these very large dat...