Violating Linear Regression Assumptions: A guide on what not to do

- Linear relationship. Due to the parametric nature of linear regression, we are limited by the straight line relationship between X and Y.

- Additive relationship between independent variables. This is perhaps the most frequently violated assumption, and a primary reason why tree models often outperform linear models by a wide margin.

- Autocorrelation. ...

- Collinearity. ...

What are the four assumptions of linear regression?

There are four assumptions associated with a linear regression model:

- Linearity: The relationship between X and the mean of Y is linear.

- Homoscedasticity: The variance of the residuals is the same for any value of X.

- Independence: Observations are independent of each other.

- Normality: For any fixed value of X, Y is normally distributed.

What are the four assumptions of regression?

- Linear relationship: There exists a linear relationship between the independent variable, x, and the dependent variable, y.

- Independence: The residuals are independent.

- Homoscedasticity: The residuals have constant variance at every level of x.

- Normality: The residuals of the model are normally distributed.

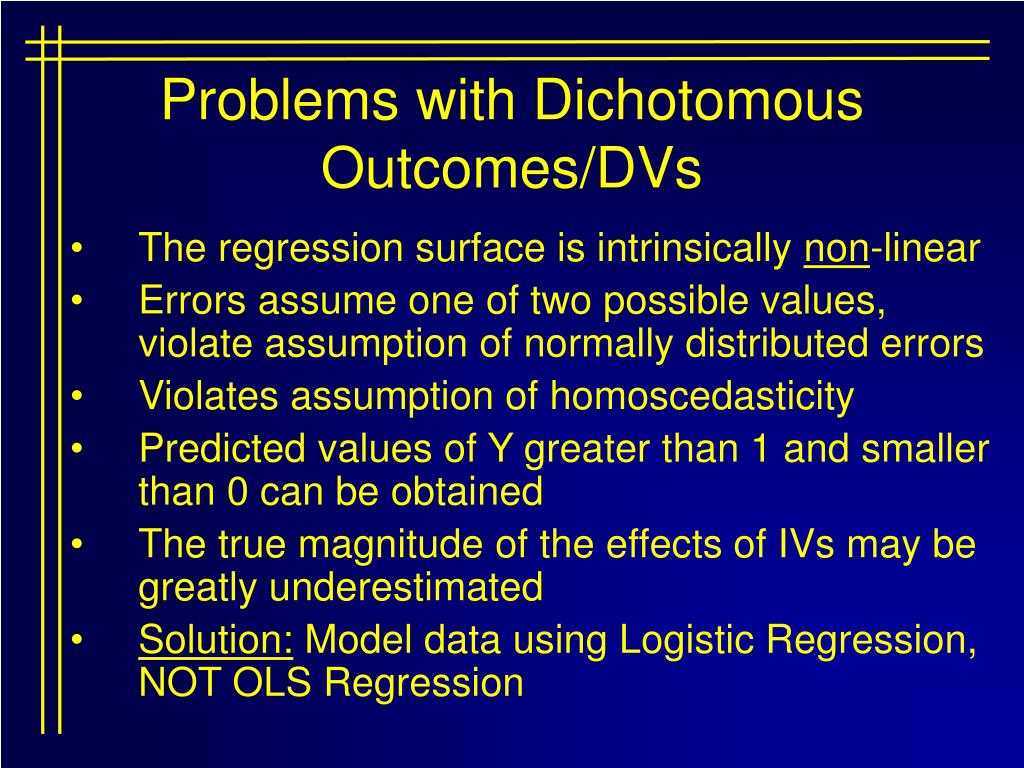

What are the assumptions of logistic regression?

- Logistic regression assumes that there is minimal or no multicollinearity among the independent variables.

- Logistic regression assumes that the independent variables are linearly related to the log odds.

- Logistic regression usually requires a large sample size to predict properly.

How do you tell if the assumptions of regression are violated?

From the YouTube video "Violating Regression Assumptions": The first one that I want to talk about is a population mean of zero. This shouldn't happen, so if we are actually using the ordinary least-squares equation, meaning we have a best-fit line, then the ...

What happens when linear regression assumptions are violated?

Similar to what occurs if assumption five is violated, if assumption six is violated, then the results of our hypothesis tests and confidence intervals will be inaccurate. One solution is to transform your target variable so that it becomes normal. This can have the effect of making the errors normal, as well.

What are some assumptions made about errors in a regression equation?

There are four assumptions associated with a linear regression model. Linearity: The relationship between X and the mean of Y is linear. Homoscedasticity: The variance of the residuals is the same for any value of X. Independence: Observations are independent of each other. Normality: For any fixed value of X, Y is normally distributed.

What can go wrong with regression analysis?

Know the main issues surrounding other regression pitfalls, including overfitting, excluding important predictor variables, extrapolation, missing data, and power and sample size.

What is violation assumption?

A violation of assumptions is a situation in which the theoretical assumptions associated with a particular statistical or experimental procedure are not fulfilled.

What happens when assumptions are violated?

Violations of the assumptions of your analysis affect your ability to trust your results and to draw valid inferences from them. When the assumptions of your analysis are not met, you have a few options as a researcher.

What is a violation of the independence assumption?

One of the assumptions of most tests is that the observations are independent of each other. This assumption is violated when the value of one observation tends to be too similar to the values of other observations.

What are the assumptions of error term?

The error term ($\mu_i$) is a random real number, i.e. it may assume any positive, negative or zero value by chance. Each value has a certain probability, so the error term is a random variable. The mean value of $\mu_i$ is zero, i.e. $E(\mu_i) = 0$; more precisely, the mean value of $\mu_i$ conditional upon the given $X_i$ is zero, $E(\mu_i \mid X_i) = 0$.
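
As a rough illustration of the zero-mean idea, the sketch below fits ordinary least squares to synthetic data (the data, coefficients and seed are assumptions made up for this example). It shows that the fitted residuals average to zero whenever the model includes an intercept. This is a mechanical property of OLS and should not be read as proof that the unobservable errors have mean zero.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (illustrative only): y = 3 + 2x + error, with E(error) = 0.
x = rng.uniform(0, 10, size=200)
y = 3.0 + 2.0 * x + rng.normal(0, 1, size=200)

# Design matrix with an intercept column, then an OLS fit.
X = np.column_stack([np.ones_like(x), x])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)

# Residuals are the observable stand-ins for the error term.
residuals = y - X @ beta
mean_residual = residuals.mean()

print(f"intercept={beta[0]:.3f}, slope={beta[1]:.3f}")
print(f"mean residual={mean_residual:.2e}")
```

Because the intercept absorbs any constant shift, a nonzero error mean would show up as a biased intercept rather than as a nonzero residual average.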

Which assumptions are important to consider while implementing regression technique?

Let's look at the important assumptions in regression analysis: There should be a linear and additive relationship between dependent (response) variable and independent (predictor) variable(s).

What are the limitations to linear regression?

The disadvantages of linear regression:

- Linear regression only looks at the mean of the dependent variable. Linear regression looks at a relationship between the mean of the dependent variable and the independent variables. ...

- Linear regression is sensitive to outliers. ...

- Data must be independent.

What is the main problem with linear regression?

The main limitation of linear regression is the assumption of linearity between the dependent variable and the independent variables. In the real world, relationships are rarely exactly linear. The model assumes a straight-line relationship between the dependent and independent variables, which often does not hold.

How can you prevent regression problems?

This answer concerns regression testing in software, which is a different sense of "regression" from the statistical one. What can help:

- Automate regression testing to reduce manual work.

- Rotate the team and avoid having one person do the same work over and over again.

- Educate testers about the importance of regression testing and its long-term contribution to quality.

- Make regression testing metrics very visible to the team and management.

What are the consequences of violating linear regression assumptions?

No real data will conform exactly to linear regression assumptions. Some violations make the results worthless, others are usually trivial. If your data points are close to independent; and there are no obvious patterns in the data or large outliers; and you have at least 30 obs...

4. Violation of the CLRM Assumption.pdf - Course Hero

Violation of Assumption 2: heteroscedasticity (Elisabetta Pellini, SMM150 Quantitative Methods for Finance). Let’s first discuss how we can tackle Problem #2: there is a way of correcting the standard errors so that our interval estimates and hypothesis tests are valid. We can employ the so-called White’s heteroscedasticity-consistent standard errors (or simply robust standard errors ...
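
To make the idea of robust standard errors concrete, here is a minimal NumPy sketch of the basic White (HC0) sandwich estimator, computed next to the classical OLS standard errors. The simulated data, seed and variable names are assumptions for illustration; statistical packages usually offer this estimator, along with small-sample variants, as a built-in option.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500

# Synthetic heteroscedastic data (illustrative): the error spread grows with x.
x = rng.uniform(1, 10, size=n)
y = 1.0 + 0.5 * x + rng.normal(0, 1, size=n) * x

X = np.column_stack([np.ones(n), x])
XtX_inv = np.linalg.inv(X.T @ X)
beta = XtX_inv @ X.T @ y
resid = y - X @ beta

# Classical standard errors: assume one common error variance.
sigma2 = resid @ resid / (n - X.shape[1])
se_classical = np.sqrt(np.diag(sigma2 * XtX_inv))

# White HC0 covariance: (X'X)^-1 [X' diag(e_i^2) X] (X'X)^-1
meat = X.T @ (X * resid[:, None] ** 2)
cov_hc0 = XtX_inv @ meat @ XtX_inv
se_robust = np.sqrt(np.diag(cov_hc0))

print("classical SE:", se_classical)
print("robust SE:   ", se_robust)
```

The coefficient estimates are the same in both cases; only the standard errors, and therefore the intervals and tests built on them, change.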

What to do if Assumptions are Violated?

- Transformations are used as a remedy for non-linearity, non-constant variance and non-normality.

- If the relationship is non-linear but the variance of Y is approximately constant over X, try to find a transformation of X that results in a linear relationship.

Why does Model 4 violate the no endogeneity assumption?

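Model 4 violates the no endogeneity assumption because researchers omitted sqft from the model. Remember, when relevant variables are omitted from the model, they get absorbed by the error term. If the omitted variable is correlated with a predictor that stays in the model, the error term becomes correlated with that predictor, which is exactly what endogeneity means.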
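
The effect of omitting a relevant variable can be sketched with a small simulation. Only `sqft` comes from the text above; the `bedrooms` predictor, the `price` response, the coefficients and the seed are hypothetical choices for this example, not the actual Model 4.

```python
import numpy as np

def ols(X, y):
    """Least-squares coefficients for y ~ X (X already holds an intercept column)."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

rng = np.random.default_rng(2)
n = 5000

# Hypothetical housing data: bedrooms is correlated with sqft.
sqft = rng.normal(1500, 300, size=n)
bedrooms = sqft / 500 + rng.normal(0, 0.5, size=n)
price = 50_000 + 100 * sqft + 10_000 * bedrooms + rng.normal(0, 20_000, size=n)

ones = np.ones(n)
full = ols(np.column_stack([ones, sqft, bedrooms]), price)   # sqft included
short = ols(np.column_stack([ones, bedrooms]), price)        # sqft omitted

print(f"bedrooms coefficient with sqft:    {full[2]:,.0f}")
print(f"bedrooms coefficient without sqft: {short[1]:,.0f}")
```

In the short model the `bedrooms` coefficient also picks up the effect of the missing `sqft`, so it lands far from the value of 10,000 used to generate the data.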

Does multicollinearity affect prediction?

Violating the no-multicollinearity assumption does not impact prediction, but can impact inference. For example, p-values typically become larger for highly correlated covariates, which can cause statistically significant variables to lack significance. Violating linearity can affect both prediction and inference.

Satyabrata Mishra

Parametric models have lost their sheen in the age of Deep Learning. But for smaller datasets, and when interpretability outweighs predictive power, models like linear and logistic regression still hold sway.

1. Linear relationship

Due to the parametric nature of linear regression, we are limited by the straight line relationship between X and Y. If the relationship is non-linear, conclusions drawn from the model can be badly misleading, and this often shows up as a wide divergence between performance on training and test data.

2. Additive relationship between independent variables

This is perhaps the most frequently violated assumption, and a primary reason why tree models often outperform linear models by a wide margin. Since the output of linear regression/logistic regression depends on the sum of the variables multiplied by their coefficients, the assumption is that the effect of each variable does not depend on the values of the others. This is rarely the case.
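
A minimal sketch of what a broken additivity assumption looks like, using synthetic data in which the response is driven by the product of two predictors (an assumption chosen to make the effect obvious). A purely additive fit explains almost nothing, while adding an explicit interaction column recovers most of the signal.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an OLS fit of y on X (X already holds an intercept column)."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)

rng = np.random.default_rng(3)
n = 2000

# Synthetic data: the effect of x1 depends entirely on x2 (pure interaction).
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = x1 * x2 + rng.normal(0, 0.5, size=n)

ones = np.ones(n)
r2_additive = r_squared(np.column_stack([ones, x1, x2]), y)
r2_interaction = r_squared(np.column_stack([ones, x1, x2, x1 * x2]), y)

print(f"additive model R^2:         {r2_additive:.3f}")
print(f"with interaction term R^2:  {r2_interaction:.3f}")
```

Tree models can split on one variable and then on another, which lets them pick up this kind of dependence without the interaction being specified by hand.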

3. Autocorrelation

The no-autocorrelation assumption says that the residual errors are uncorrelated with one another. Residual errors are just (y - y^), so in practice this means the error in one row should carry no information about the error in any other row.
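
One common numeric check for correlation between successive residuals is the Durbin-Watson statistic. The sketch below computes it directly with NumPy on two simulated residual series (both series are assumptions for illustration): values near 2 suggest little first-order autocorrelation, while values well below 2 suggest positive autocorrelation.

```python
import numpy as np

def durbin_watson(residuals):
    """Sum of squared successive differences divided by the sum of squared residuals."""
    e = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

rng = np.random.default_rng(4)
n = 2000

# Residuals with no serial correlation.
white = rng.normal(size=n)

# Residuals following an AR(1) process: each value leans on the previous one.
noise = rng.normal(size=n)
ar1 = np.empty(n)
ar1[0] = noise[0]
for t in range(1, n):
    ar1[t] = 0.9 * ar1[t - 1] + noise[t]

dw_white = durbin_watson(white)
dw_ar1 = durbin_watson(ar1)

print(f"Durbin-Watson, independent residuals: {dw_white:.2f}")
print(f"Durbin-Watson, AR(1) residuals:       {dw_ar1:.2f}")
```

The statistic only looks at neighbouring rows, so it is most meaningful when the rows have a natural order, such as time.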

4. Collinearity

Collinearity is the presence of highly correlated variables within X. Again, this is one of the effects that don't really affect the prediction to a great extent. But it really creates a mess with the model's interpretability.
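
Collinearity is often quantified with the variance inflation factor (VIF): regress each predictor on all the others and compute 1 / (1 - R^2). The following is a hand-rolled NumPy sketch on synthetic data (the data and the `vif` helper are assumptions for this example). A common rule of thumb treats values above roughly 5 to 10 as a warning sign.

```python
import numpy as np

def vif(X):
    """Variance inflation factor for each column of X (X has no intercept column)."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    factors = []
    for j in range(k):
        target = X[:, j]
        # Regress column j on an intercept plus all remaining columns.
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - resid @ resid / np.sum((target - target.mean()) ** 2)
        factors.append(1.0 / (1.0 - r2))
    return np.array(factors)

rng = np.random.default_rng(5)
n = 1000

x1 = rng.normal(size=n)
x2 = x1 + 0.1 * rng.normal(size=n)   # nearly a copy of x1
x3 = rng.normal(size=n)              # unrelated to the others

vifs = vif(np.column_stack([x1, x2, x3]))
print("VIFs for x1, x2, x3:", np.round(vifs, 1))
```

High values for `x1` and `x2` mean their individual coefficients are poorly determined, even though predictions from the model as a whole can remain accurate.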

The Prosecution's Summary

Prosecutor: Ladies and gentlemen, we’ve presented a slew of evidence in this trial. You’ve seen, with your own eyes, every possible heinous violation of the assumptions for regression in the defendant’s model. Here’s what we’ve shown, in a nutshell:

The Defense's Summary

Defense: The prosecution makes it sound so easy doesn’t it? Just choose Graphs > Four in one, they say. Just transform the data, they say. But we all know real life doesn’t always work out quite so neatly.

What is the first assumption of linear regression?

The first assumption of linear regression is that there is a linear relationship between the independent variable, x, and the dependent variable, y.

What to do if the normality assumption is violated?

If the normality assumption is violated, you have a few options: First, verify that any outliers aren’t having a huge impact on the distribution. If there are outliers present, make sure that they are real values and that they aren’t data entry errors.

How to check if assumption is met?

There are two common ways to check if this assumption is met: 1. Check the assumption visually using Q-Q plots. A Q-Q plot, short for quantile-quantile plot, is a type of plot that we can use to determine whether or not the residuals of a model follow a normal distribution.
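
The points behind a Q-Q plot can be computed without any plotting library. This sketch, which uses made-up residuals and a hypothetical `qq_points` helper, pairs sorted standardized residuals with standard normal quantiles and summarizes how straight the resulting line is with a correlation coefficient. The `(i - 0.5) / n` plotting positions are one common convention among several.

```python
from statistics import NormalDist

import numpy as np

def qq_points(residuals):
    """Return (theoretical normal quantiles, sorted standardized residuals)."""
    r = np.sort(np.asarray(residuals, dtype=float))
    n = r.size
    probs = (np.arange(1, n + 1) - 0.5) / n
    theoretical = np.array([NormalDist().inv_cdf(p) for p in probs])
    sample = (r - r.mean()) / r.std(ddof=1)
    return theoretical, sample

rng = np.random.default_rng(6)

normal_resid = rng.normal(size=500)
skewed_resid = rng.exponential(size=500) - 1.0   # strongly right-skewed

corr_normal = np.corrcoef(*qq_points(normal_resid))[0, 1]
corr_skewed = np.corrcoef(*qq_points(skewed_resid))[0, 1]

print(f"Q-Q correlation, normal residuals: {corr_normal:.4f}")
print(f"Q-Q correlation, skewed residuals: {corr_skewed:.4f}")
```

Plotting `theoretical` against `sample` gives the usual picture: points close to a straight diagonal line suggest approximately normal residuals, while systematic curvature suggests skew or heavy tails.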

What is linear regression?

Linear regression is a useful statistical method we can use to understand the relationship between two variables, x and y. However, before we conduct linear regression, we must first make sure that four assumptions are met:

How to do nonlinear transformations?

Common examples include taking the log, the square root, or the reciprocal of the independent and/or dependent variable. 2. Add another independent variable to the model.
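
As a small illustration of the log transformation mentioned above, the sketch below simulates a response that grows exponentially in x (an assumed data-generating process) and fits a straight line to both the raw and the log-transformed response. The `fit_line` helper is hypothetical.

```python
import numpy as np

def fit_line(x, y):
    """Fit y = a + b*x by least squares; return (a, b, R^2)."""
    X = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    r2 = 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)
    return coef[0], coef[1], r2

rng = np.random.default_rng(7)
n = 500

# Synthetic data: log(y) is linear in x, so y itself is not.
x = rng.uniform(0, 5, size=n)
y = np.exp(1.0 + 0.8 * x + rng.normal(0, 0.2, size=n))

_, _, r2_raw = fit_line(x, y)
intercept_log, slope_log, r2_log = fit_line(x, np.log(y))

print(f"raw response R^2: {r2_raw:.3f}")
print(f"log response R^2: {r2_log:.3f}, slope on log scale: {slope_log:.3f}")
```

The two R^2 values are measured on different scales, so they are best read as a sign of how linear each relationship looks rather than as a strict model comparison. After a log transform, the slope describes approximate proportional change in y per unit of x.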

How to redefine dependent variable?

Redefine the dependent variable. One common way to redefine the dependent variable is to use a rate, rather than the raw value. For example, instead of using the population size to predict the number of flower shops in a city, we may instead use population size to predict the number of flower shops per capita.

Does heteroscedasticity increase the variance of the regression coefficient estimates?

Specifically, heteroscedasticity increases the variance of the regression coefficient estimates, but the regression model doesn’t pick up on this. This makes it much more likely for a regression model to declare that a term in the model is statistically significant, when in fact it is not.
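
A rough way to detect heteroscedasticity numerically is to regress the squared residuals on the predictors and look at n times the R^2 of that auxiliary regression. This is the studentized (Koenker) form of the Breusch-Pagan test. The sketch below uses simulated data and a hypothetical helper name; with one predictor, the statistic is compared against 3.84, the 5% critical value of a chi-square distribution with one degree of freedom.

```python
import numpy as np

def breusch_pagan_lm(X, y):
    """n * R^2 from regressing squared OLS residuals on X (X holds an intercept column)."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    e2 = (y - X @ coef) ** 2
    aux, *_ = np.linalg.lstsq(X, e2, rcond=None)
    fitted = X @ aux
    r2 = 1.0 - np.sum((e2 - fitted) ** 2) / np.sum((e2 - e2.mean()) ** 2)
    return len(y) * r2

rng = np.random.default_rng(8)
n = 500
x = rng.uniform(1, 10, size=n)
X = np.column_stack([np.ones(n), x])

# Constant error spread versus spread that grows with x.
y_homo = 1.0 + 0.5 * x + rng.normal(0, 1, size=n)
y_hetero = 1.0 + 0.5 * x + rng.normal(0, 1, size=n) * x

lm_homo = breusch_pagan_lm(X, y_homo)
lm_hetero = breusch_pagan_lm(X, y_hetero)

CRITICAL_5PCT_DF1 = 3.84  # chi-square(1) critical value at the 5% level
print(f"LM statistic, constant variance: {lm_homo:.2f}")
print(f"LM statistic, growing variance:  {lm_hetero:.2f} (threshold {CRITICAL_5PCT_DF1})")
```

A statistic far above the threshold, as in the second case, is evidence that the error variance changes with x, which is the situation where the usual significance tests become unreliable.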
