How to implement backpropagation using Python and NumPy?

Let’s code a Neural Network in plain NumPy

- Mysteries of Neural Networks Part III. Using high-level frameworks like Keras, TensorFlow or PyTorch allows us to build very complex models quickly.

- First things first. ...

- Initiation of neural network layers. ...

- Activation functions. ...

- Forward propagation. ...

- Loss function. ...

- Backward propagation. ...

- Updating parameters values. ...

- Putting things together. ...

- David vs Goliath. ...
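The outline above can be made concrete with a minimal sketch. It assumes a single hidden layer, sigmoid activations and a binary cross-entropy loss, none of which are specified in the outline; the function names (`init_params`, `forward`, `backward`, `update`, `train`) and the toy OR dataset are hypothetical choices made for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_in, n_hidden, n_out, seed=0):
    # Initiation of layers: small random weights, zero biases.
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.5, size=(n_hidden, n_in)),
        "b1": np.zeros((n_hidden, 1)),
        "W2": rng.normal(0.0, 0.5, size=(n_out, n_hidden)),
        "b2": np.zeros((n_out, 1)),
    }

def forward(params, X):
    # Forward propagation; X has shape (features, samples).
    A1 = sigmoid(params["W1"] @ X + params["b1"])
    A2 = sigmoid(params["W2"] @ A1 + params["b2"])
    return A1, A2

def bce_loss(Y_hat, Y):
    # Binary cross-entropy averaged over samples (eps avoids log(0)).
    eps = 1e-12
    m = Y.shape[1]
    return float(-np.sum(Y * np.log(Y_hat + eps)
                         + (1 - Y) * np.log(1 - Y_hat + eps)) / m)

def backward(params, X, Y, A1, A2):
    # Backward propagation: chain rule applied from the output layer inwards.
    m = X.shape[1]
    dZ2 = A2 - Y                          # sigmoid output + cross-entropy
    dZ1 = (params["W2"].T @ dZ2) * A1 * (1 - A1)
    return {
        "W2": dZ2 @ A1.T / m,
        "b2": dZ2.sum(axis=1, keepdims=True) / m,
        "W1": dZ1 @ X.T / m,
        "b1": dZ1.sum(axis=1, keepdims=True) / m,
    }

def update(params, grads, lr):
    # Updating parameter values with plain gradient descent.
    for key in params:
        params[key] -= lr * grads[key]

def train(X, Y, n_hidden=4, lr=0.5, epochs=200, seed=0):
    # Putting things together.
    params = init_params(X.shape[0], n_hidden, Y.shape[0], seed)
    losses = []
    for _ in range(epochs):
        A1, A2 = forward(params, X)
        losses.append(bce_loss(A2, Y))
        update(params, backward(params, X, Y, A1, A2), lr)
    return params, losses

# Toy data (logical OR), chosen only so the example is self-contained.
X = np.array([[0.0, 0.0, 1.0, 1.0],
              [0.0, 1.0, 0.0, 1.0]])
Y = np.array([[0.0, 1.0, 1.0, 1.0]])
params, losses = train(X, Y)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The dictionary of parameters keeps the update step a single loop; a deeper network would probably store one entry per layer instead of hard-coding two.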

How to forecast with neural network?

- What is the time horizon required for my predictions?

- What is the temporal frequency required for my predictions?

- Can forecasts be updated frequently over time, or should they be produced only once and remain static?

How to update the bias in neural network backpropagation?

Stochastic Gradient Descent

- Define a cost function, with a vector as input (weight or bias vector)

- Start at a random point along the x-axis and step in any direction. ...

- Calculate the gradient using backpropagation, as explained earlier
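As a rough illustration of these steps, the sketch below fits a single linear neuron with stochastic gradient descent. The neuron, the half squared error cost, the learning rate and the name `sgd_fit` are all assumptions made for the example. With only one layer, "backpropagation" reduces to a one-step chain rule, and the bias gradient equals the prediction error because the derivative of the prediction with respect to the bias is 1.

```python
import random

def sgd_fit(data, lr=0.05, epochs=200, seed=0):
    """Fit pred = w * x + b by stochastic gradient descent (illustrative only)."""
    rng = random.Random(seed)
    # Start at a random point in parameter space.
    w, b = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)                 # visit samples in random order
        for x, y in data:
            error = (w * x + b) - y       # dC/dpred for C = 0.5 * error**2
            grad_w = error * x            # chain rule: dpred/dw = x
            grad_b = error                # chain rule: dpred/db = 1
            w -= lr * grad_w              # step against the gradient
            b -= lr * grad_b
    return w, b

# Noise-free samples from y = 2x + 1, used only to make the sketch runnable.
samples = [(x, 2.0 * x + 1.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
w, b = sgd_fit(samples)
print(w, b)
```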

How to build a neural network from scratch?

- Input layer: In this layer, I input my data set consisting of 28×28 images. ...

- Hidden layer 1: In this layer, I reduce the number of nodes from 784 in the input layer to 128 nodes. ...

- Hidden layer 2: In this layer, I decide to go with 64 nodes, from the 128 nodes in the first hidden layer. ...
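The layer sizes listed above translate into weight shapes as in the sketch below. The 784, 128 and 64 come from the list; the output size of 10, the sigmoid activation and the initialisation scale are assumptions added so that the example runs, and `init_layers` is a hypothetical helper name.

```python
import numpy as np

# 784 inputs (a flattened 28x28 image), then 128 and 64 hidden nodes.
# The final 10 is an assumption (for example, ten classes); it is not in the list above.
LAYER_SIZES = [784, 128, 64, 10]

def init_layers(sizes, seed=0):
    """Create one (weights, bias) pair per connection between consecutive layers."""
    rng = np.random.default_rng(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = rng.normal(0.0, np.sqrt(1.0 / n_in), size=(n_out, n_in))
        b = np.zeros(n_out)
        layers.append((W, b))
    return layers

def forward(layers, x):
    """Pass one flattened image through every layer (sigmoid is an assumption)."""
    a = x
    for W, b in layers:
        a = 1.0 / (1.0 + np.exp(-(W @ a + b)))
    return a

layers = init_layers(LAYER_SIZES)
image = np.zeros((28, 28))       # placeholder image, not real data
x = image.reshape(-1)            # 28 * 28 = 784 input values
out = forward(layers, x)
print([W.shape for W, _ in layers], out.shape)
```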

What is backpropagation neural network?

Back-propagation is the essence of neural net training. It is the practice of fine-tuning the weights of a neural net based on the error rate (i.e. loss) obtained in the previous epoch (i.e. iteration). Proper tuning of the weights ensures lower error rates, making the model reliable by increasing its generalization.

What is back propagation algorithm in machine learning?

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions generally. These classes of algorithms are all referred to generically as "backpropagation".

What is backpropagation with example?

Backpropagation is an algorithm that propagates the errors from the output nodes back to the input nodes. Therefore, it is simply referred to as backward propagation of errors. It is used in many neural network applications in data mining, such as character recognition and signature verification.

What is backpropagation and its process?

Backpropagation, short for "backward propagation of errors," is an algorithm for supervised learning of artificial neural networks using gradient descent. Given an artificial neural network and an error function, the method calculates the gradient of the error function with respect to the neural network's weights.

What is objective of back propagation algorithm?

The objective of the backpropagation algorithm is to develop a learning algorithm for multilayer feedforward neural networks, so that the network can be trained to capture the mapping implicitly.

Why is it called backpropagation?

It's called back-propagation (BP) because, after the forward pass, you compute the partial derivative of the loss function with respect to the parameters of the network, which, in the usual diagrams of a neural network, are placed before the output of the network (i.e. to the left of the output if the output of the ...

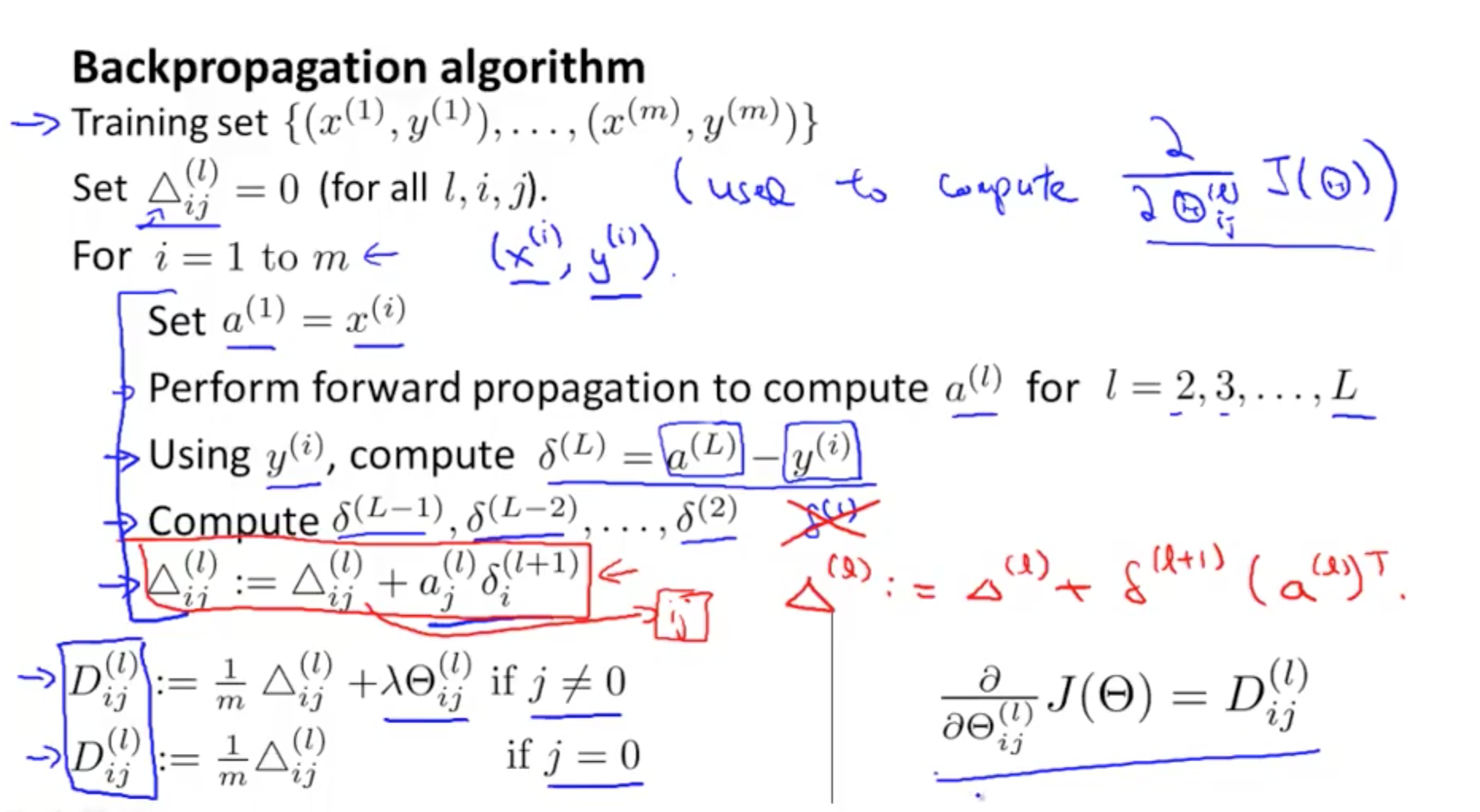

What are the steps in backpropagation algorithm?

Below are the steps involved in backpropagation:

- Step 1: Forward propagation.

- Step 2: Backward propagation.

- Step 3: Putting all the values together and calculating the updated weight value.

... The above network contains the following:

- two inputs

- two hidden neurons

- two output neurons

- two biases

What are features of back propagation algorithm?

The main features of backpropagation are that it is an iterative, recursive and efficient method for calculating the updated weights, improving the network until it is able to perform the task for which it is being trained.

What is the other name of back propagation algorithm?

An important goal of backpropagation is to give data scientists insight into how changing a weight function will change loss functions and the overall behaviour of the neural network. The term is sometimes used as a synonym for "error correction."

What is back propagation in neural network Mcq?

Backpropagation is the transmission of error back through the network to allow weights to be adjusted so that the network can learn. An RNN (recurrent neural network) topology involves backward links from the output to the input and hidden layers.

What is backpropagation used for?

There are 2 main types of the backpropagation algorithm: Traditional backpropagation is used for static problems with a fixed input and a fixed output all the time, like predicting the class of an image. In this case, the input image and the output class never change.

What is the most popular algorithm for training artificial neural networks?

Hopefully, now you see why backpropagation is the most popular algorithm for training artificial neural networks. It’s quite powerful, and the way it works inside is fascinating.

What is the learnable parameter by which the network makes a prediction?

The weights are the learnable parameters by which the network makes a prediction. If the weights are good, then the network makes accurate predictions with less error. Otherwise, the weights should be updated to reduce the error. Assume that a neuron N1 at layer 1 is connected to another neuron N2 at layer 2.

How many layers are there in a neural network?

The network has an input layer, 2 hidden layers, and an output layer. In the figure, the network architecture is presented horizontally so that each layer is represented vertically from left to right. Each layer consists of 1 or more neurons represented by circles.

When was backpropagation first used?

The backpropagation algorithm is probably the most fundamental building block in a neural network. It was first introduced in the 1960s and popularized in 1986 by Rumelhart, Hinton and Williams in a paper called “Learning representations by back-propagating errors”. The algorithm is used to effectively train a neural network ...

What is the final part of a neural network?

The final part of a neural network is the output layer, which produces the predicted value. In our simple example, it is presented as a single neuron, colored in blue and evaluated as follows:

What is backpropagation in neural networks?

Backpropagation relies on the ability to express an entire neural network as a function of a function of a function... and so on, allowing the chain rule to be applied recursively.
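A small scalar sketch may make the "function of a function of a function" idea concrete. The three functions below are arbitrary stand-ins for layers, chosen for illustration; the analytic derivative multiplies the local derivatives in chain-rule order and is compared against a finite-difference estimate.

```python
import math

def f(x):            # innermost function (stand-in for a first layer)
    return 3.0 * x + 1.0

def g(u):            # middle function (stand-in for an activation)
    return math.tanh(u)

def h(v):            # outermost function (stand-in for a loss)
    return v ** 2

def composed(x):
    return h(g(f(x)))

def composed_grad(x):
    """d/dx h(g(f(x))) as a product of local derivatives (chain rule)."""
    u = f(x)
    v = g(u)
    dh_dv = 2.0 * v
    dg_du = 1.0 - v ** 2      # derivative of tanh(u) is 1 - tanh(u)**2
    df_dx = 3.0
    return dh_dv * dg_du * df_dx

def numeric_grad(fn, x, eps=1e-6):
    """Central finite difference, used only as a sanity check."""
    return (fn(x + eps) - fn(x - eps)) / (2.0 * eps)

x0 = 0.2
print(composed_grad(x0), numeric_grad(composed, x0))
```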

What is backpropagation used for?

Backpropagation and its variants such as backpropagation through time are widely used for training nearly all kinds of neural networks, and have enabled the recent surge in popularity of deep learning. A small selection of example applications of backpropagation are presented below.

Where is the derivative of C in a neural network?

Since a neural network has many layers, the derivative of C at a point in the middle of the network may be very far removed from the loss function, which is calculated after the last layer.

Who invented the backpropagation method?

Augustin-Louis Cauchy (1789-1857) is credited as the inventor of gradient descent. In 1847, the French mathematician developed a method of gradient descent for solving simultaneous equations.

What does it mean when the first two terms in the chain rule expression for layer 1 are shared with the gradient calculation for layer 2?

The first two terms in the chain rule expression for layer 1 are shared with the gradient calculation for layer 2. This means that computationally, it is not necessary to re-calculate the entire expression. Only the terms that are particular to the current layer must be evaluated.
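The sharing can be written out for a two-layer network. The notation here is an assumption, since the original expressions are not reproduced in this excerpt: $C$ is the cost, $z^{(l)}$ and $a^{(l)}$ are the pre-activation and activation of layer $l$, $W^{(l)}$ are its weights, and layer 2 is taken to be the output layer.

$$
\begin{aligned}
\frac{\partial C}{\partial W^{(2)}} &=
\underbrace{\frac{\partial C}{\partial a^{(2)}}\,
\frac{\partial a^{(2)}}{\partial z^{(2)}}}_{\text{shared}}\;
\frac{\partial z^{(2)}}{\partial W^{(2)}} \\[4pt]
\frac{\partial C}{\partial W^{(1)}} &=
\underbrace{\frac{\partial C}{\partial a^{(2)}}\,
\frac{\partial a^{(2)}}{\partial z^{(2)}}}_{\text{shared}}\;
\frac{\partial z^{(2)}}{\partial a^{(1)}}\,
\frac{\partial a^{(1)}}{\partial z^{(1)}}\,
\frac{\partial z^{(1)}}{\partial W^{(1)}}
\end{aligned}
$$

Under this notation, the product marked "shared" is computed once for layer 2 and reused for layer 1, so only the last three factors are new work.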

What is backpropagation algorithm?

Backpropagation algorithms are a set of methods used to efficiently train artificial neural networks following a gradient descent approach which exploits the chain rule.

Define The Neural Network Model

Forward Propagation and Evaluation

- The equations above form the network’s forward propagation. Here is a short overview: The final step in a forward pass is to evaluate the predicted output s against an expected output y. The output y is part of the training dataset (x, y), where x is the input (as we saw in the previous section). Evaluation between s and y happens through a cost function. This can be as simple as MSE (me…
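The evaluation step can be sketched as follows, assuming MSE is used in its usual sense of mean squared error and that s and y are equal-length sequences of numbers; the function name `mse` is a hypothetical choice.

```python
def mse(s, y):
    """Mean squared error between predicted outputs s and expected outputs y."""
    if len(s) != len(y):
        raise ValueError("s and y must have the same length")
    return sum((si - yi) ** 2 for si, yi in zip(s, y)) / len(s)

# Predictions [1, 2] against targets [1, 4]: squared errors are 0 and 4.
print(mse([1.0, 2.0], [1.0, 4.0]))  # 2.0
```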

Backpropagation and Computing Gradients

- According to the paper from 1986, backpropagation: [...] and [...] In other words, backpropagation aims to minimize the cost function by adjusting the network’s weights and biases. The level of adjustment is determined by the gradients of the cost function with respect to those parameters. One question may arise: why compute gradients? To answer this, we first n...